OpenAI has launched GPT-4o, a major leap forward in AI! 🤖💡 This new model integrates text, voice, and vision for a seamless and dynamic user experience. Imagine real-time insights on live sports games: rules, stats, and more!

🗣️ Enhanced Voice & Vision: GPT-4o picks up on tone of voice, multiple speakers, and background noise better than earlier models. It also excels at visual understanding, which makes it well suited to analyzing images, charts, and creative projects.

🌐 Major New Features for Free Users:

- Web-Integrated Responses: Get up-to-date answers that draw on information from the web.

- Data Analysis & Chart Creation: Perfect for reports and presentations.

- Image Understanding: Receive intelligent critiques on your photos.

- File Uploads: Get help with document summarization and content creation.

🎉 Exciting Announcements:

- Custom Chatbots: For the first time, free ChatGPT users can use custom chatbots (GPTs)!

- New Efficient Model: GPT-4o powers both free and paid versions, offering a more efficient and versatile experience.

- Multimodal Capabilities: Analyze images, videos, and speech with GPT-4o's multimodal design.

- Human-like Voice: The new ChatGPT Voice is incredibly human-like, enhancing interactive experiences.

- Desktop App: Launching soon with voice and vision capabilities for a more immersive experience.

- Gradual Rollout: All these features are launching gradually over the coming weeks!

⚡ Compared with GPT-4 Turbo, GPT-4o is twice as fast, costs half as much, and comes with higher usage limits.

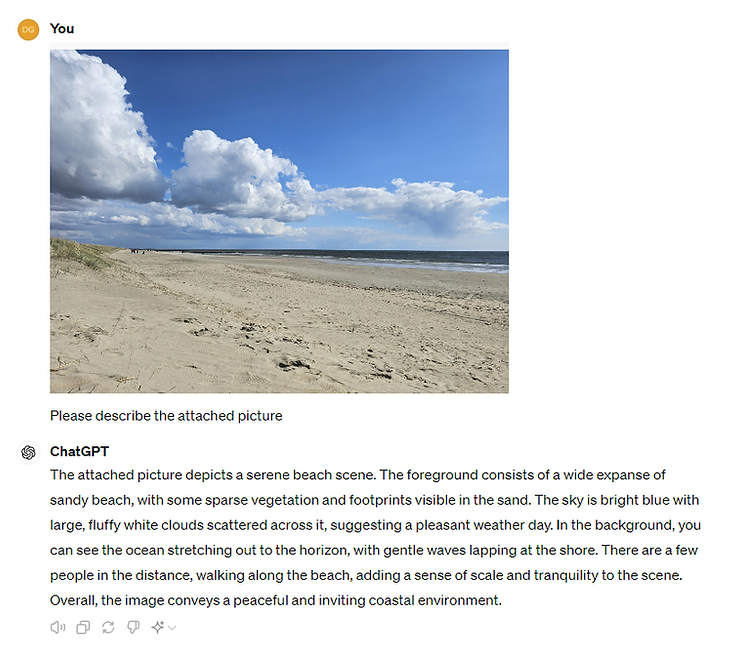

Check out my experiment with its image recognition at the end of this post!

🔗 Dive into a world where AI meets real-time sports analysis!

I asked GPT-4o to describe a beach photo I took in Denmark this year, and the results are impressive!